Regularization in Machine Learning: Meaning

Formal definitions and complicated terminology are of little use to a complete beginner. Put simply, regularization is a technique, usually introduced through regression, that shrinks the coefficient estimates towards zero.

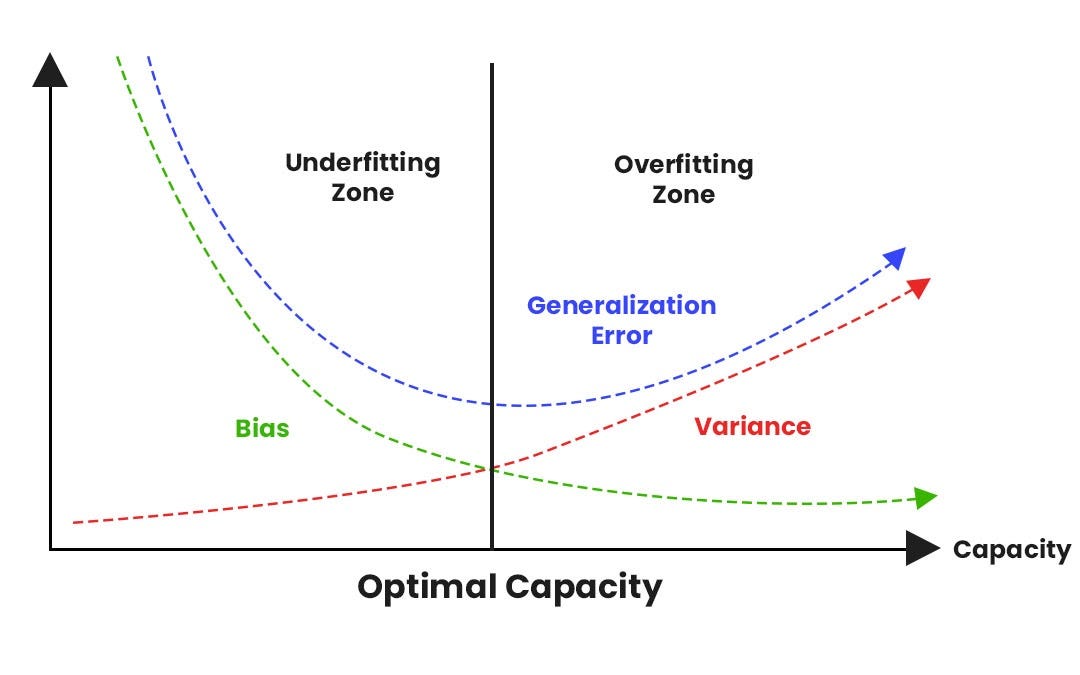

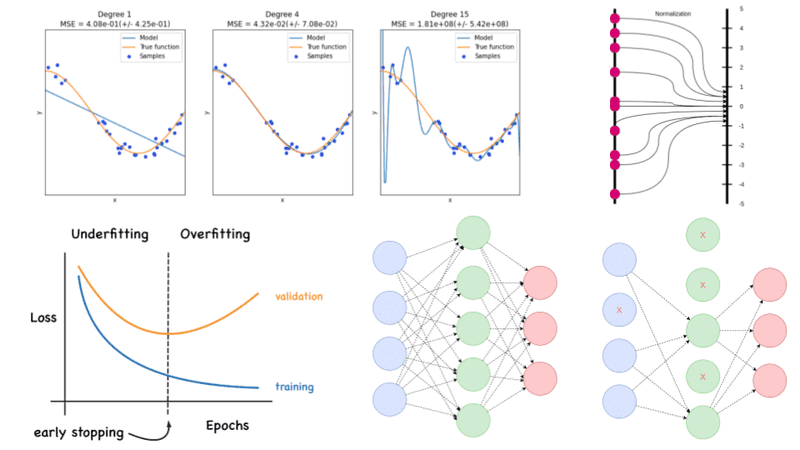

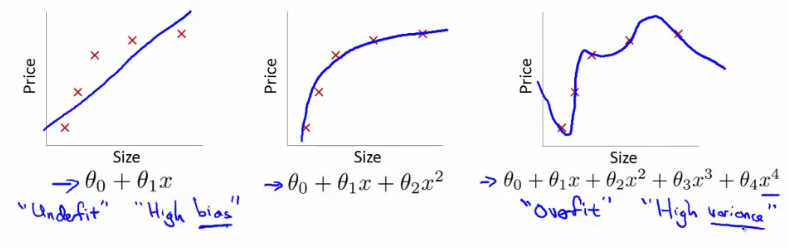

Sometimes the machine learning model performs well with the training data but does not perform well with the test data.

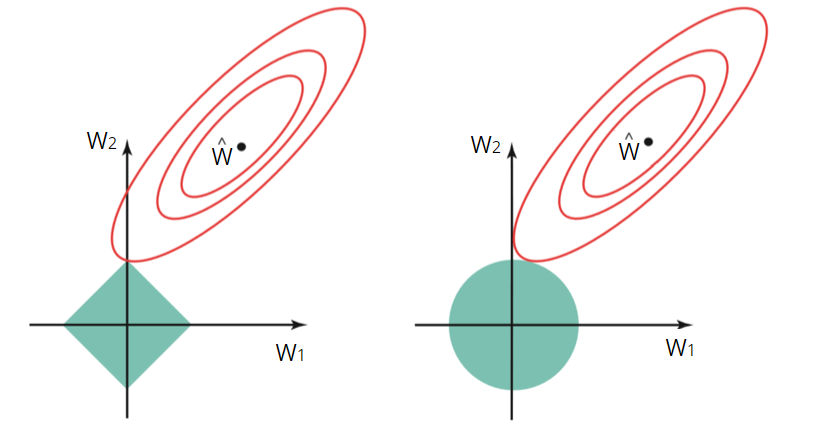

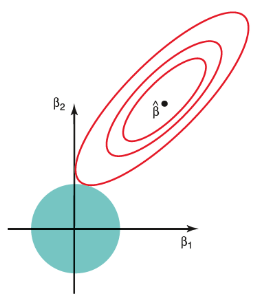

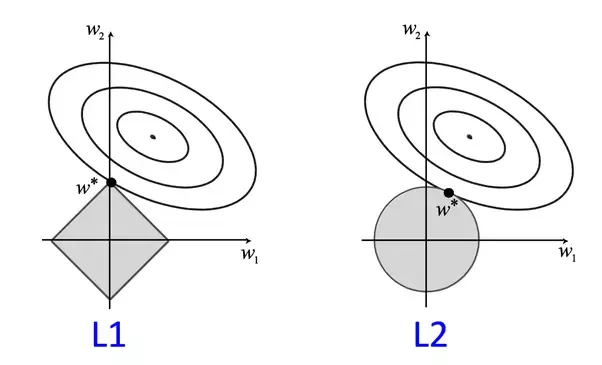

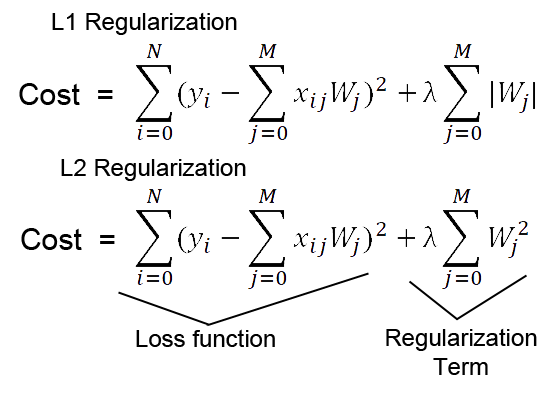

Regularization is one of the most important concepts in machine learning. In L2 regularization, model complexity is measured as the sum of the squares of the weights; combining this penalty with the squared-error (L2) loss gives ridge regression. Regularized regression of this kind constrains, regularizes, or shrinks the coefficient estimates towards zero.
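The ridge objective described above can be sketched in a few lines of plain Python. The function name `ridge_loss`, the penalty strength `lam`, and the toy data are illustrative assumptions, not taken from the text; the sketch also assumes the squared-error term is summed rather than averaged, which is only one common convention.

```python
def ridge_loss(weights, X, y, lam):
    """Squared-error loss plus an L2 penalty (sum of squared weights)."""
    # Data-fit term: sum of squared residuals over all samples.
    squared_error = 0.0
    for row, target in zip(X, y):
        prediction = sum(w * x for w, x in zip(weights, row))
        squared_error += (prediction - target) ** 2
    # Complexity term: sum of the squares of the weights.
    complexity = sum(w ** 2 for w in weights)
    return squared_error + lam * complexity


# Hypothetical toy data: three samples, two features.
X = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
y = [2.0, 1.0, 3.0]

exact_fit = [2.0, 1.0]  # these weights reproduce y exactly

loss_without_penalty = ridge_loss(exact_fit, X, y, lam=0.0)
loss_with_penalty = ridge_loss(exact_fit, X, y, lam=0.5)
print(loss_without_penalty, loss_with_penalty)
```

Even weights that fit the training data perfectly pay a cost once `lam` is greater than zero, which is what pushes the optimizer towards smaller coefficients.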

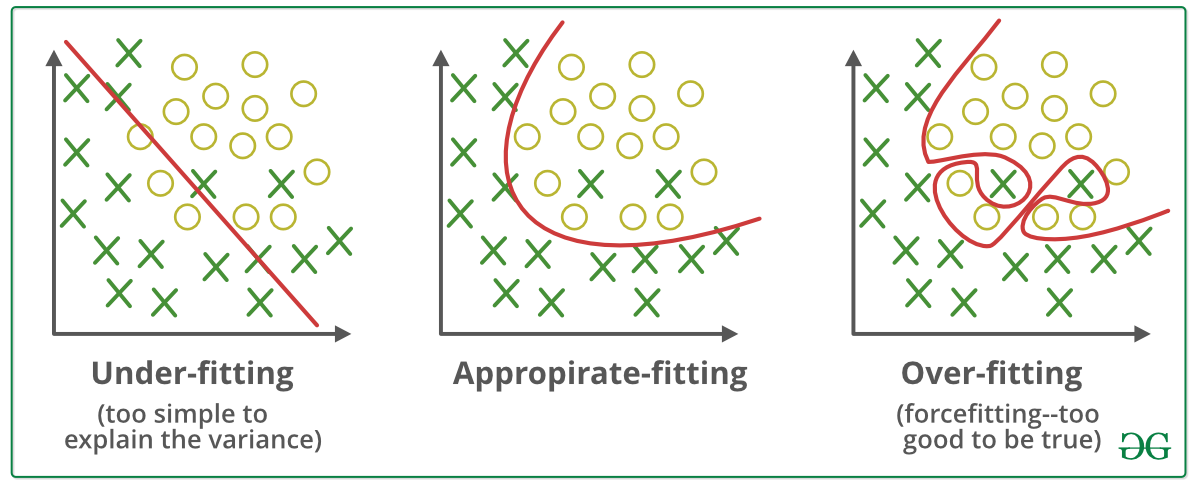

Regularization is a technique used to reduce errors by fitting the function appropriately on the given training set while avoiding overfitting. When a model is overfed with data that it does not have the capacity to handle, it starts behaving irregularly.

Sometimes one resource is not enough to give you a good understanding of a concept. A number of regularization techniques are commonly used.

What is regularization in machine learning? First, let's understand why we face overfitting in the first place. The formal definition of regularization is as follows.

In general, regularization involves augmenting the input information to enforce generalization. In other words, regularization means discouraging the learning of a more complex or more flexible machine learning model in order to prevent overfitting. Overfitting is a phenomenon that occurs when a model learns the detail and noise in the training data to such an extent that it negatively impacts the performance of the model on new data.

The commonly used regularization techniques are L1 regularization, L2 regularization, and dropout regularization. This article focuses on L1 and L2 regularization. Regularization reduces overfitting by ignoring or down-weighting the less important features, which helps to ensure better performance and accuracy of the ML model.
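How L1 and L2 treat less important features differently can be illustrated with a small sketch. It assumes the special case of an orthonormal design matrix, where each penalty has a closed-form effect on an unpenalized least-squares coefficient: with the objective ½‖y − Xw‖² + λ‖w‖₁, L1 soft-thresholds the coefficient by λ, and with ½‖y − Xw‖² + (λ/2)‖w‖², L2 divides it by 1 + λ. The function names and coefficient values are hypothetical.

```python
def l1_shrink(coef, lam):
    """Soft-thresholding: coefficients with |coef| <= lam become exactly zero."""
    if coef > lam:
        return coef - lam
    if coef < -lam:
        return coef + lam
    return 0.0


def l2_shrink(coef, lam):
    """Proportional shrinkage: coefficients get smaller but stay nonzero."""
    return coef / (1.0 + lam)


# Hypothetical unpenalized coefficients: two large, two small.
coefs = [3.0, -0.5, 0.25, -2.0]
lam = 1.0

l1_result = [l1_shrink(c, lam) for c in coefs]
l2_result = [l2_shrink(c, lam) for c in coefs]
print(l1_result)
print(l2_result)
```

In this setting L1 sets the two small coefficients to exactly zero, which is the sense in which it ignores less important features, while L2 only scales every coefficient down.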

Overfitting is a common problem. In simple terms, regularization reduces overfitting by adding a complexity penalty to the loss function, as in L2 regularization.
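As a minimal sketch of a complexity penalty at work, the following fits a single slope by gradient descent, once without and once with an L2 penalty. The function name `fit_slope`, the learning rate, the step count, and the data are assumptions chosen for illustration; the loss here is the mean squared error plus `lam * w**2`.

```python
def fit_slope(xs, ys, lam, lr=0.05, steps=500):
    """Gradient descent on mean squared error + lam * w**2 for the model y = w * x."""
    n = len(xs)
    w = 0.0
    for _ in range(steps):
        # Gradient of the data-fit term (mean squared error).
        grad_fit = (2.0 / n) * sum(x * (w * x - y) for x, y in zip(xs, ys))
        # Gradient of the L2 complexity penalty.
        grad_penalty = 2.0 * lam * w
        w -= lr * (grad_fit + grad_penalty)
    return w


xs = [1.0, 2.0, 3.0]
ys = [3.0, 6.0, 9.0]  # generated by y = 3x, with no noise

w_plain = fit_slope(xs, ys, lam=0.0)
w_penalized = fit_slope(xs, ys, lam=1.0)
print(w_plain, w_penalized)
```

Without the penalty the slope converges to 3; with `lam=1.0` it settles at a smaller value (42/17 for this data), showing the shrinkage towards zero.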

This technique prevents the model from overfitting by adding extra information to it.

A simple relation for linear regression looks like this. In the context of machine learning, the term regularization refers to a set of techniques that help the machine to learn rather than just memorize. Regularization is a technique used to address the overfitting problem of machine learning models.
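The linear-regression relation referred to above is not reproduced in the text. A standard form, assumed here rather than taken from the source, with response $Y$, predictors $X_1, \dots, X_p$, and coefficients $\beta_0, \dots, \beta_p$, is:

$$
Y \approx \beta_0 + \beta_1 X_1 + \beta_2 X_2 + \dots + \beta_p X_p
$$

Under this notation, an L2-regularized (ridge) fit would choose the coefficients that minimize the residual sum of squares plus a penalty, where $\lambda \ge 0$ is an assumed tuning parameter:

$$
\sum_{i=1}^{n} \Bigl( y_i - \beta_0 - \sum_{j=1}^{p} \beta_j x_{ij} \Bigr)^2 + \lambda \sum_{j=1}^{p} \beta_j^2
$$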

For instance, if you were to model the price of an apartment, you know that the price depends on its area. By definition, regularization is the method used to reduce the error by fitting a function appropriately on the given training set while avoiding overfitting of the model.

Regularization is often described as a type of regression that solves the problem of overfitting; more precisely, it is a technique applied to regression and other models. It is also considered a process of adding more information to resolve a complex problem and avoid overfitting.

Regularization makes the model more robust and decreases its complexity, which helps prevent overfitting. This is exactly why we use it in applied machine learning.

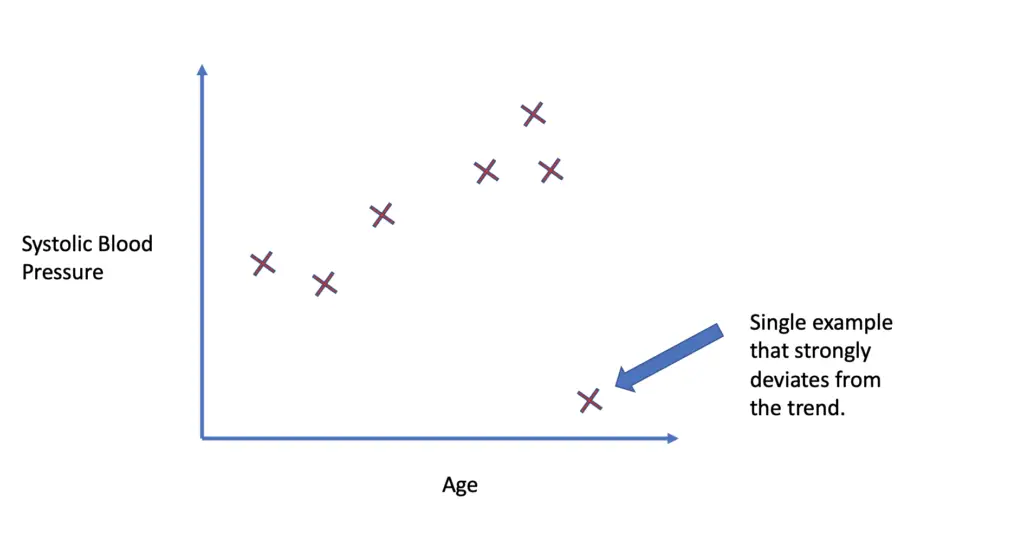

Overfitting happens when the ML model fits useless data points (noise) as well. In other words, regularization discourages learning a more complex or flexible model, so as to avoid the risk of overfitting. For any machine learning problem, you can essentially break your data points into two components: pattern + stochastic noise.
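The pattern-plus-noise view can be made concrete with a small sketch that compares a model that memorizes the training targets with one that learns a straight line. Everything here is an assumption made for illustration: the pattern `y = 2x`, the helper names, and in particular the fixed ±0.5 offsets that stand in for stochastic noise so that the run is reproducible.

```python
def fit_line(xs, ys):
    """Ordinary least-squares fit of y = intercept + slope * x."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    slope = sxy / sxx
    return mean_y - slope * mean_x, slope


def mse(predict, xs, ys):
    """Mean squared error of a prediction function on (xs, ys)."""
    return sum((predict(x) - y) ** 2 for x, y in zip(xs, ys)) / len(xs)


xs = list(range(10))
pattern = [2.0 * x for x in xs]
# Assumption: fixed +/-0.5 offsets play the role of noise.
noise = [0.5 if x % 2 == 0 else -0.5 for x in xs]
train_y = [p + e for p, e in zip(pattern, noise)]
test_y = [p - e for p, e in zip(pattern, noise)]  # same pattern, different noise

# "Memorizing" model: a lookup table of the training targets.
table = dict(zip(xs, train_y))


def memorizer(x):
    return table[x]


# "Learning" model: a straight line fitted to the training data.
intercept, slope = fit_line(xs, train_y)


def line(x):
    return intercept + slope * x


print("memorizer train/test:", mse(memorizer, xs, train_y), mse(memorizer, xs, test_y))
print("line      train/test:", mse(line, xs, train_y), mse(line, xs, test_y))
```

The memorizer has zero training error because it has fitted the noise as well as the pattern, and it pays for that on the test targets; the line has a nonzero training error but a lower test error.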

It also improves the performance of models on new inputs. Regularization is one of the most widely used techniques for penalizing complex models in machine learning; it is deployed to reduce overfitting, and thereby the generalization error, by keeping network weights small. Before we explore the concept of regularization in detail, let's discuss what the terms learning and memorizing mean from the perspective of machine learning.

Regularization is an important concept in machine learning, and it addresses the overfitting problem. On the separate topic of feature scaling, one advantage of normalization over standardization is that it does not tie us to any specific distribution.

Regularization means making things regular or acceptable. Understanding regularization is very important for training a good model.

Regularization refers to modifications made to a learning algorithm that are intended to reduce its generalization error but not its training error. It reduces a model's error by avoiding overfitting, so that the model functions properly on new data.

The concept of regularization is widely used even outside the machine learning domain. Normalization usually means scaling a variable so that its values fall within a desired range, such as [-1, 1] or [0, 1], while standardization transforms data to have a mean of zero and a standard deviation of 1. In the context of machine learning, regularization is the process that regularizes or shrinks the coefficients towards zero.
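The difference between normalization and standardization described above can be sketched with the Python standard library. The function names and sample data are hypothetical, and the standardization here uses the population standard deviation (`statistics.pstdev`), which is an assumption; some tools use the sample standard deviation instead.

```python
from statistics import mean, pstdev


def normalize(values, low=0.0, high=1.0):
    """Min-max scaling into the range [low, high].

    Assumes the values are not all identical (otherwise the span is zero).
    """
    smallest, largest = min(values), max(values)
    span = largest - smallest
    return [low + (high - low) * (v - smallest) / span for v in values]


def standardize(values):
    """Shift and scale to mean 0 and population standard deviation 1."""
    mu, sigma = mean(values), pstdev(values)
    return [(v - mu) / sigma for v in values]


data = [2.0, 4.0, 6.0, 8.0]

unit_range = normalize(data)               # scaled into [0, 1]
symmetric_range = normalize(data, -1.0, 1.0)  # scaled into [-1, 1]
z_scores = standardize(data)

print(unit_range)
print(symmetric_range)
print(z_scores)
```

Neither transformation is regularization: both rescale the inputs, whereas regularization changes the training objective.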

In general, regularization means making things regular or acceptable. In machine learning, regularization is a procedure that shrinks the coefficients towards zero.
